A Quick On-Ramp to Chatbots with No Coding Required

This article was originally published on medium.com.

Posted on behalf of Bob Chesebrough, Solutions Architect, Intel Corporation

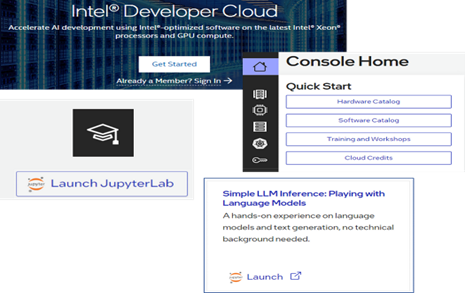

I aim to show a quick on-ramp to playing with this chatbot, which is based on the 7-billion parameter version of the Mistral foundation model. The neural-chat-7b model was fine-tuned on Intel® Gaudi® 2 AI accelerators using several Intel AI software libraries. I’m going to show you how to play with this model using prebaked code on the Intel® Developer Cloud.

If you follow these instructions, you can register for the standard free account on Intel Developer Cloud and start playing with a Jupyter Notebook preconfigured for various large language models (LLMs) such as:

- Writer/camel-5b-hf

- openlm-research/open_llama_3b_v2

- Intel/neural-chat-7b-v3-1

- HuggingFaceH4/zephyr-7b-beta

- tiiuae/falcon-7b

You can do all this without writing a single line of code. Simply type out a prompt and start playing. Powered by Intel® Data Center GPU Max 1100s, this notebook provides a hands-on experience that doesn’t require deep technical knowledge.

Whether you’re a student, writer, educator, or simply curious about AI, this guide is designed for you. LLMs have a wide range of applications, but experimenting with them can also be fun. Here, we’ll use some simple pre-trained models to explore text generation interactively. Ready to try it out? Let’s set up our environment and start exploring the world of text generation with LLMs!

The short summary of your on-ramp instructions is listed here and shown in the screenshots below:

- Go to the Intel Developer Cloud.

- Click the “Get Started” button.

- Subscribe to the “Standard - Free” service tier and complete your cloud registration.

- To start a free Jupyter Notebook session that uses the latest Intel CPUs and GPUs, click the “Training and Workshops” icon.

- Click “Simple LLM Inference: Playing with Language Models.” This will launch a prepopulated Jupyter Notebook (simple_llm_inference.ipynb).

- Run all cells.

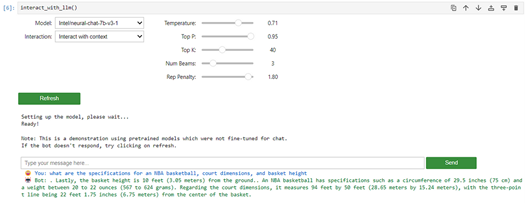

- Select the model and options to try.

- Select the options and text prompt you want to try out. For options, I suggest choosing a model such as Intel/neural-chat-7b-v3-1 with “with_context” and a prompt similar to “What are the specs for an NBA basketball, court dimensions, and basket height.”
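To give a feel for what a “with_context” style option could be doing behind the scenes, here is a minimal sketch. It is not the notebook's actual code: the function name `build_prompt`, the `DEFAULT_CONTEXT` wording, and the `User:`/`Bot:` turn markers are all assumptions made for illustration. The idea is simply that a context option prepends guiding text to your question before it reaches the model.

```python
# Hypothetical sketch: NOT the notebook's actual implementation.
# The context wording and the turn markers below are assumptions.
DEFAULT_CONTEXT = (
    "You are a helpful assistant. Answer the user's question "
    "accurately and concisely."
)


def build_prompt(user_input: str, with_context: bool = True) -> str:
    """Return the text that would be sent to the model."""
    question = user_input.strip()
    if with_context:
        # Prepend the guiding context, then mark the speaker turns.
        return f"{DEFAULT_CONTEXT}\nUser: {question}\nBot:"
    # Without context, the model sees only the raw question.
    return question


if __name__ == "__main__":
    q = ("What are the specs for an NBA basketball, "
         "court dimensions, and basket height?")
    print(build_prompt(q, with_context=True))
    print("---")
    print(build_prompt(q, with_context=False))
```

Comparing the two printed prompts shows why the same question can produce very different answers depending on the option you pick.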

Interactive Prompt

The interactive nature of the notebook makes it easy to enter a prompt and generate a response.

Generative AI Accuracy Reminder

Chatbots such as these are known to produce inaccurate results at times and shouldn’t be relied upon for accurate or trustworthy answers. For example, when I ran the above notebook with the “Without Context” option and the prompt “What are the specs for an NBA basketball, court dimensions, and basket height,” the models returned nonsensical answers such as:

“Bot: An NBA basketball has a diameter of 12 ft (3.7 meters), a height of 3 ft (0.91 meters) above the ground, and a width of 9 ft (2.7 meters).”

The bot generated the following response when I used the “With Context” option:

“Bot: . The specs for an official NBA basketball include a 29.5 inches (75 cm) circumference and a weight ranging from 20 to 22 ounces (567 to 624 grams). The basketball hoop’s rim height is 10 ft (3.05 meters) from the ground. Regarding the court dimensions, it measures 94 ft (28.65 meters) in length and 50 ft (15.24 meters) in width.”

While the last response is not absurdly wrong, to me, a layman, it should still be double-checked against official NBA specs to ensure accuracy!
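One part of that double-checking can be done with plain arithmetic: confirming that the metric figures in the “With Context” response agree with the imperial ones. The sketch below does only that. The helper `matches` and the 1% tolerance are my own choices for illustration, and the check says nothing about whether the imperial numbers are the real NBA specifications, which still need an official source.

```python
# Exact conversion factors (international definitions).
CM_PER_INCH = 2.54
M_PER_FOOT = 0.3048
G_PER_OUNCE = 28.349523125


def matches(claimed: float, computed: float, tol: float = 0.01) -> bool:
    """True if `claimed` is within a relative tolerance of `computed`."""
    return abs(claimed - computed) <= tol * abs(computed)


# (label, imperial value, conversion factor, metric value quoted by the bot)
claims = [
    ("ball circumference, in -> cm", 29.5, CM_PER_INCH, 75.0),
    ("ball weight (low), oz -> g", 20.0, G_PER_OUNCE, 567.0),
    ("ball weight (high), oz -> g", 22.0, G_PER_OUNCE, 624.0),
    ("rim height, ft -> m", 10.0, M_PER_FOOT, 3.05),
    ("court length, ft -> m", 94.0, M_PER_FOOT, 28.65),
    ("court width, ft -> m", 50.0, M_PER_FOOT, 15.24),
]

# Map each label to whether the bot's metric figure matches the conversion.
results = {
    label: matches(claimed, value * factor)
    for label, value, factor, claimed in claims
}

for label, ok in results.items():
    print(f"{label}: {'consistent' if ok else 'MISMATCH'}")
```

A response that passes this check is internally consistent, which is a useful first filter but not proof that the underlying facts are right.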

Call to Action

Try out this no-code approach to experimenting with chatbot models using the standard free Intel Developer Cloud account and the ready-made Jupyter Notebook. Try your hand with different 7B parameter models and prompts of your own.

We also encourage you to check out Intel’s other AI/ML framework optimizations and end-to-end portfolio of tools and incorporate them into your AI workflow. You can also learn about the unified, open, standards-based oneAPI programming model that forms the foundation of Intel’s AI Software Portfolio, which helps you prepare, build, deploy, and scale your AI solutions.