I recently hosted the webinar Prompt-Driven Efficiencies for LLMs, where my guest Eduardo Alvarez, Senior AI Solutions Engineer at Intel, unpacked several techniques for prompt-driven performance efficiencies. These techniques are meant to deliver the following business outcomes:

- Serving more users with high-quality responses the first time.

- Providing higher levels of user support while maintaining data privacy.

- Improving operational efficiency and cost with prompt economization.

Eduardo presented three techniques available to developers:

- Prompt engineering includes different prompting techniques that generate higher-quality answers.

- Retrieval-augmented generation improves the prompt with additional context to reduce the burden on end users.

- Prompt economization techniques improve the efficiency of data movement through the GenAI pipeline.

Effective prompting can reduce the number of model inferences (and their costs) while reaching high-quality results more quickly.

Prompt engineering: Improving model results

Let’s start with a prompt engineering framework for LLMs with three categories: Learning-Based, Creative Prompting, and Hybrid.

The Learning-Based technique encompasses one-shot and few-shot prompts. This approach gives the model context by including worked examples directly in the prompt. A zero-shot prompt, by contrast, contains no examples and relies only on what the model already learned during training. One-shot or few-shot prompting supplies that missing context in the prompt itself, showing the model the task and any new information it needs so the LLM returns more accurate results.
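To make the distinction concrete, here is a minimal Python sketch that assembles zero-shot and few-shot prompts as plain strings. The sentiment task, the example reviews, and the `Review:`/`Sentiment:` layout are illustrative assumptions rather than anything from the webinar, and no model is actually called.

```python
from __future__ import annotations


def build_prompt(query: str, examples: list[tuple[str, str]] | None = None) -> str:
    """No examples gives a zero-shot prompt; one gives one-shot; several give few-shot."""
    lines = ["Classify the sentiment of each review as positive or negative.", ""]
    for review, label in examples or []:
        # Each worked example shows the model the expected input and output format.
        lines += [f"Review: {review}", f"Sentiment: {label}", ""]
    # The new query ends with an open slot for the model to complete.
    lines += [f"Review: {query}", "Sentiment:"]
    return "\n".join(lines)


zero_shot = build_prompt("The battery died after an hour.")
few_shot = build_prompt(
    "The battery died after an hour.",
    examples=[
        ("Setup took two minutes and it just works.", "positive"),
        ("The screen cracked on day one.", "negative"),
    ],
)
print(few_shot)
```

Only the prompt text differs between the two cases; the same model would receive both, so the examples are the only extra "training" it gets.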

Under the Creative Prompting category, techniques such as negative prompting and iterative prompting can deliver more accurate responses. Negative prompting sets boundaries on the model’s response by stating what it should not do, while iterative prompting uses follow-up prompts that let the model refine its answer over the sequence of prompts.
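The sketch below illustrates both ideas under stated assumptions: `negative_prompt` appends explicit "do not" constraints to a task, and `iterative_prompting` keeps the full conversation history so each follow-up is answered in the context of earlier turns. The role/content message format resembles common chat APIs but is an assumption here, and `echo_llm` is a hypothetical stand-in for a real model call.

```python
from __future__ import annotations

from typing import Callable


def negative_prompt(task: str, avoid: list[str]) -> str:
    """Negative prompting: append explicit 'do not' constraints that bound the response."""
    constraints = "\n".join(f"- Do not {item}." for item in avoid)
    return f"{task}\n\nConstraints:\n{constraints}"


def iterative_prompting(
    llm: Callable[[list[dict[str, str]]], str],
    first_prompt: str,
    follow_ups: list[str],
) -> list[dict[str, str]]:
    """Iterative prompting: send a first prompt, then follow-ups, keeping the whole
    history in context so each new answer can build on the earlier ones."""
    history = [{"role": "user", "content": first_prompt}]
    history.append({"role": "assistant", "content": llm(history)})
    for follow_up in follow_ups:
        history.append({"role": "user", "content": follow_up})
        history.append({"role": "assistant", "content": llm(history)})
    return history


def echo_llm(history: list[dict[str, str]]) -> str:
    # Hypothetical stand-in for a chat-completion call: reports how many messages it saw.
    return f"reply to message {len(history)}"


prompt = negative_prompt(
    "Summarize our return policy for a customer.",
    avoid=["mention internal ticket numbers", "exceed three sentences"],
)
turns = iterative_prompting(
    echo_llm,
    prompt,
    ["Make it friendlier.", "Now translate it into Spanish."],
)
for turn in turns:
    print(f"{turn['role']}: {turn['content']}")
```

Combining the two, as in the usage above, is one simple form of the Hybrid approach described next.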

The Hybrid Prompting approach can combine any or all of the above.

The advantages of these techniques come with a caveat: users need the expertise to understand them and the context to write high-quality prompts.

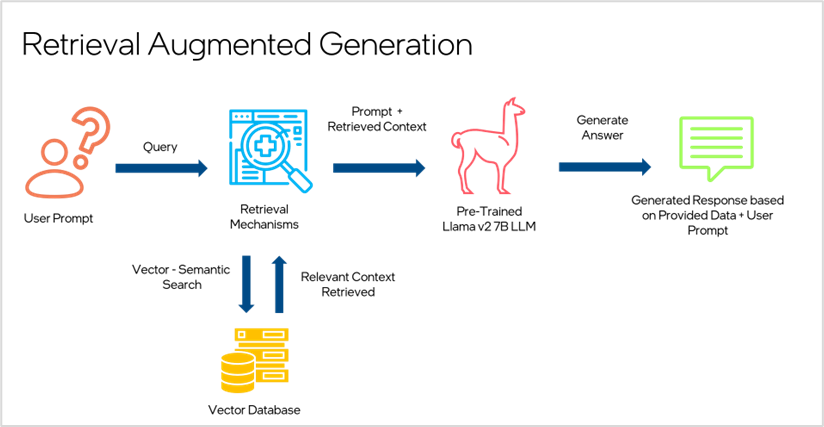

Retrieval-augmented generation: Improving accuracy with company data

LLMs are usually trained on a general corpus of internet data, not on data specific to your business. Adding business data to the prompt of an LLM workflow with retrieval-augmented generation (RAG) delivers more relevant results. This workflow embeds business data into a vector database for prompt context retrieval; the prompt and the retrieved context are then sent to the LLM to generate the response. RAG gives you the benefit of your data in the LLM without retraining the model, so your data stays private and you avoid the additional compute costs of training.
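A minimal sketch of that retrieve-then-prompt flow follows. It swaps the embedding model for a toy bag-of-words vector and the vector database for a hypothetical in-memory `InMemoryVectorStore` class, so only the shape of the workflow carries over: embed documents, retrieve the closest ones for a question, and combine them with the question in the prompt.

```python
from __future__ import annotations

import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts. A real pipeline would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database: a list of (embedding, document) pairs."""

    def __init__(self) -> None:
        self.items = []

    def add(self, doc: str) -> None:
        # Index time: embed each business document once and store it.
        self.items.append((embed(doc), doc))

    def search(self, query: str, k: int = 2) -> list[str]:
        # Query time: rank stored documents by similarity to the question.
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [doc for _, doc in ranked[:k]]


def build_rag_prompt(question: str, context: list[str]) -> str:
    """Combine the retrieved context and the user's question into one prompt for the LLM."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only the context below.\n\nContext:\n{joined}\n\nQuestion: {question}"


store = InMemoryVectorStore()
store.add("Our support desk is open 9am to 5pm, Monday through Friday.")
store.add("Refunds are issued within 14 days of an approved return.")
store.add("The cafeteria serves lunch from noon.")

question = "When is the support desk open?"
prompt = build_rag_prompt(question, store.search(question, k=1))
print(prompt)
```

The model’s weights never change in this flow; only the prompt does, which is why RAG avoids retraining.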

Prompt economization: Delivering value while saving money

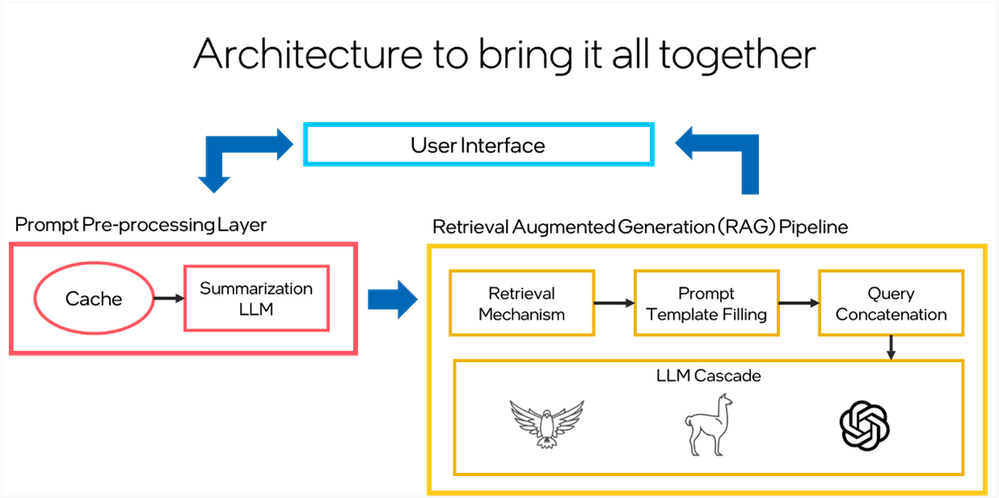

The last technique focuses on different prompting methods that economize on model inferencing.

- Token summarization uses local models to reduce the number of tokens per user prompt passed to the LLM service, which reduces costs for APIs that charge on a per-token basis.

- Completion caching stores answers to commonly asked questions in a cache so that generating them doesn’t occupy inference resources every time they’re asked.

- Query concatenation combines multiple queries into a single LLM submission to reduce the overhead that accumulates on a per-query basis, such as pipeline overhead and prefill processing.

- LLM cascades run queries first on simpler LLMs and score the responses for quality, escalating to larger, more expensive models only for the queries that require it. This approach reduces the average compute required per query.
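Three of these techniques can be sketched in a few lines of Python. Everything here is illustrative: `CompletionCache`, `concatenate_queries`, and `cascade` are hypothetical helpers, the models are plain functions standing in for real LLM calls, and `toy_score` is a placeholder for whatever quality evaluation a production cascade would use. Token summarization is left out because it depends on a local summarization model.

```python
from __future__ import annotations

from typing import Callable


class CompletionCache:
    """Completion caching: reuse answers to repeated prompts instead of re-running inference."""

    def __init__(self, llm: Callable[[str], str]) -> None:
        self.llm = llm
        self.cache = {}  # normalized prompt -> cached answer
        self.model_calls = 0

    def ask(self, prompt: str) -> str:
        # Normalize case and whitespace so trivially different phrasings share one entry.
        key = " ".join(prompt.lower().split())
        if key not in self.cache:
            self.model_calls += 1  # only cache misses cost an inference
            self.cache[key] = self.llm(prompt)
        return self.cache[key]


def concatenate_queries(queries: list[str]) -> str:
    """Query concatenation: pack several questions into one numbered submission."""
    numbered = "\n".join(f"{i}. {q}" for i, q in enumerate(queries, start=1))
    return f"Answer each question separately, keeping the numbering:\n{numbered}"


def cascade(
    prompt: str,
    models: list[tuple[str, Callable[[str], str]]],
    score: Callable[[str, str], float],
    threshold: float,
) -> tuple[str, str]:
    """LLM cascade: try models from cheapest to most expensive and stop at the first
    answer whose quality score meets the threshold. Assumes `models` is non-empty."""
    for name, model in models[:-1]:
        answer = model(prompt)
        if score(prompt, answer) >= threshold:
            return name, answer
    # Every cheaper model fell short, so escalate to the largest one.
    name, model = models[-1]
    return name, model(prompt)


# Usage with hypothetical stand-in models and scorer.
def small_model(prompt: str) -> str:
    return "I'm not sure."


def large_model(prompt: str) -> str:
    return "Paris is the capital of France."


def toy_score(prompt: str, answer: str) -> float:
    return 0.0 if "not sure" in answer else 1.0


cache = CompletionCache(large_model)
cache.ask("What is the capital of France?")
cache.ask("what is the  capital of France?")  # served from the cache
print(cache.model_calls)  # → 1

print(concatenate_queries(["What are your hours?", "Do you ship overseas?"]))

winner, answer = cascade(
    "What is the capital of France?",
    [("small", small_model), ("large", large_model)],
    toy_score,
    threshold=0.5,
)
print(winner, "->", answer)  # → large -> Paris is the capital of France.
```

In a real cascade, the savings depend on how often the cheap model’s answer passes the scorer; here the small model always fails, so every query escalates.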

Putting it all together

Ultimately, model throughput is limited by the available compute, memory, and power. But it’s not just about throughput; accuracy and efficiency are key to driving generative AI outcomes. An LLM prompt architecture is usually a combination of the above techniques, customized to your business needs.

For a more detailed discussion of prompt engineering and efficiencies, watch the webinar here.

Interested in learning more about AI models and generative AI? Sign up for a free trial on Intel Developer Cloud.